- 8-bit CPU: github.com/ramyadhadidi/tt06-8bit-cpu

- 8-bit CIM: github.com/ramyadhadidi/tt07-8bit-vector-compute-in-SRAM

Taping Out ASICs: My First Hardware-Design-Related Entry

This may seem redundant to those who know me well, but it may not be obvious to everyone familiar with my work: I can design chips. I have a bachelor’s degree in Electrical Engineering (EE), and instead of starting with object-oriented languages, I first learned Assembly, Verilog, and C. This might not seem significant to my software engineering friends, but understanding concepts from the lower levels up gives a much deeper appreciation of everything built on top of them. Training a large workforce to code in Python is relatively easy; training people to write Verilog is far harder. It takes years of studying how electrical circuits work and experimenting with them, which cannot be condensed into a single class. Learning this way also gives me a deeper understanding of the code we write every day (e.g., in Python) and of how much of it runs inefficiently on the hardware it executes on.

Recently, I decided to revive my hardware design experience for two reasons:

- I work at a hardware startup designing an accelerator, so this gives me better exposure to what comes after microarchitecture design (e.g., layout, routing).

- I attended a talk by Jason Cong (UCLA) in which he pointed out the shortage of hardware engineers relative to software engineers and the urgent need for more of them. This need is especially pressing given the CHIPS Act and the uncertain future of TSMC. Building a robust hardware industry is much harder than building a software one, particularly at advanced nodes.

Given that my hardware design skills might be more valuable than my software skills, I decided to revive them. My day job is in computer architecture, which is what my PhD trained me for (and more): extracting performance from custom hardware units, which covers the kinds of tasks any accelerator, including a GPU, performs. However, apart from a few courses involving FPGAs and ASICs, I had not delved deeply into ASICs. I have more experience with FPGAs from various jobs and papers, but I had never worked directly on a taped-out ASIC. Some of my papers include layouts, but we never taped them out. TinyTapeout gave me the chance to tape out a design in SkyWater’s 130 nm process (SkyWater is a US-based foundry) as part of a shuttle, for a small fee.

What is TinyTapeout? TinyTapeout is a platform that lets users tape out a design as an ASIC (Application-Specific Integrated Circuit) and receive it on a test board with an RP2040 (Raspberry Pi Pico) microcontroller for control. It provides an accessible way for individuals and small teams to create custom hardware, learn about chip design, and test their projects in a real-world environment.

What is a Shuttle? Every IC contains a rectangular silicon die, and that die was once part of a round silicon wafer. Typically, if you have many customers, you buy whole wafers and fabricate many copies of the same design on each one (Economics of Computer Architecture 101!). For small businesses and individuals, however, a wafer is expensive, and you likely don’t need 30 dies; you might need just one or two. So some intermediary companies gather several designs and tape them out together on a single wafer, known as a multi-project wafer (MPW). This way, you collectively buy a wafer with other users while a middleman manages the tapeout. You just provide your design to the middleman, and they deliver the die to you. TinyTapeout acts as this middleman, but it also packages your die (in the standard black plastic IC package) and even provides a test board.

How Much Do These Designs Cost? The first design, which occupies a single tile, costs around $100. The second design, which spans four tiles (2x2), costs around $300.

What’s the Tile Size? 160 x 100 µm
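Combining the prices above with the tile size gives a rough cost per unit of silicon area. The following back-of-the-envelope sketch assumes the approximate prices quoted here and treats a 2x2 design as exactly four tiles of area (the real multi-tile footprint may differ slightly); the names are illustrative only.

```python
# Back-of-the-envelope cost per area for TinyTapeout designs.
# Assumptions: 160 x 100 um tiles, ~$100 for 1 tile, ~$300 for a 2x2
# design, and a 2x2 design counted as exactly 4 tiles of area.
TILE_W_UM = 160
TILE_H_UM = 100
UM2_PER_MM2 = 1_000_000


def tile_area_mm2(n_tiles: int) -> float:
    """Silicon area of n tiles, in mm^2."""
    return n_tiles * TILE_W_UM * TILE_H_UM / UM2_PER_MM2


designs = {"1 tile": (1, 100.0), "2x2 tiles": (4, 300.0)}

for name, (tiles, price_usd) in designs.items():
    area = tile_area_mm2(tiles)
    print(f"{name}: {area:.3f} mm^2, "
          f"${price_usd / tiles:.0f} per tile, "
          f"${price_usd / area:,.0f} per mm^2")
```

A single tile is 0.016 mm², and under these assumptions the 2x2 option brings the price per tile down from about $100 to about $75.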

What’s the PDK? The open-source SkyWater 130 nm PDK (sky130), here.

8-bit Single-Cycle Microprocessor on TinyTapeout Shuttle 6

This project involves the design and implementation of an 8-bit single-cycle microprocessor. The processor includes a register file and an Arithmetic Logic Unit (ALU). I did not include memory operations, because managing the communication with the RP2040 on the test board for them was more complicated. The design implements a simple instruction set architecture (ISA) that supports basic ALU operations, byte loads/stores to and from registers, and status checks for the ALU carry. The entire design took less than a week. I reused some code from my old 5-stage pipelined MIPS processor from 2013, but this design has just a single stage. More details here in this repo.

ISA Overview

The ISA is focused on register operations and basic arithmetic/logic functions. Below is the breakdown of the instruction set:

- MVR: Move Register

- LDB: Load Byte into Register

- STB: Store Byte from Register

- RDS: Read (store) processor status

- NOT, AND, ORA, ADD, SUB, XOR, INC: Various ALU operations
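To make the ISA concrete, here is a minimal behavioral model in Python, the kind of golden reference a cocotb testbench can compare a design against. It is a sketch under hypothetical assumptions, not the actual RTL: the register count, operand format, and carry behavior below are illustrative choices, and only the mnemonics come from the list above.

```python
# Hypothetical behavioral model of the 8-bit single-cycle CPU's ISA.
# Assumptions (not taken from the real RTL): 4 general-purpose registers,
# two-operand ALU ops of the form rd = rd OP rs, LDB loads an immediate byte,
# STB writes a register to an output port, RDS copies the carry flag into
# a register, and only ADD/SUB/INC update the carry flag.
from dataclasses import dataclass, field

MASK = 0xFF  # every register and the datapath are 8 bits wide


@dataclass
class CPU:
    regs: list = field(default_factory=lambda: [0] * 4)
    carry: int = 0
    out: int = 0

    def step(self, op: str, rd: int = 0, rs: int = 0, imm: int = 0) -> None:
        """Execute one instruction; each one completes in a single step."""
        r = self.regs
        if op == "MVR":
            r[rd] = r[rs]
        elif op == "LDB":
            r[rd] = imm & MASK
        elif op == "STB":
            self.out = r[rd]
        elif op == "RDS":
            r[rd] = self.carry
        elif op == "NOT":
            r[rd] = ~r[rd] & MASK
        elif op == "AND":
            r[rd] &= r[rs]
        elif op == "ORA":
            r[rd] |= r[rs]
        elif op == "XOR":
            r[rd] ^= r[rs]
        elif op in ("ADD", "SUB", "INC"):
            b = 1 if op == "INC" else r[rs]
            raw = r[rd] - b if op == "SUB" else r[rd] + b
            # Carry = unsigned overflow for ADD/INC, borrow for SUB.
            self.carry = int(raw > MASK or raw < 0)
            r[rd] = raw & MASK
        else:
            raise ValueError(f"unknown opcode: {op}")


cpu = CPU()
cpu.step("LDB", rd=0, imm=200)
cpu.step("LDB", rd=1, imm=100)
cpu.step("ADD", rd=0, rs=1)   # 200 + 100 = 300 wraps to 44 and sets carry
cpu.step("RDS", rd=2)         # copy the carry flag into r2
cpu.step("STB", rd=0)         # drive r0 onto the output port
print(cpu.out, cpu.regs[2])   # 44 1
```

One `step` call per instruction mirrors the single-cycle design: no pipeline state has to be carried from one instruction to the next.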

Layout and Statistics

| Utilization (%) | Wire length (µm) |
|---|---|
| 56.74 | 30920 |

| Category | Cells | Count |
|---|---|---|
| Fill | decap fill | 1007 |
| Combo Logic | and3b a21oi a31oi and4b a22o a221o and4bb a31o a2111o o21a nand3b a21o a211oi or3b o21ai o211a o22a o2bb2a a2111oi a32o a22oi o31a or2b a21bo o32a a2bb2o o311a and2b a311o nor2b o21ba o22ai o221a o211ai o21bai a211o o2111a a221oi | 277 |
| Tap | tapvpwrvgnd | 225 |
| Buffer | buf clkbuf | 197 |
| Flip Flops | dfrtp | 121 |
| Multiplexer | mux2 | 119 |
| AND | and2 and4 and3 | 38 |
| NAND | nand2 nand3 nand2b | 34 |
| NOR | nor2 nor4 nor3 xnor2 | 32 |
| OR | or3 or2 or4 xor2 | 29 |
| Misc | dlygate4sd3 dlymetal6s2s conb | 21 |
| Inverter | inv | 11 |
| Clock | clkinv | 1 |

More details are available in this GitHub Actions run.

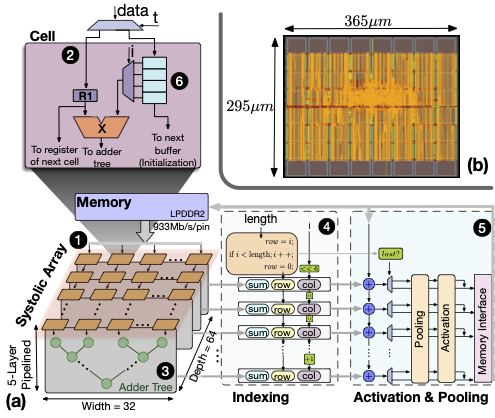

TinyTapeout Shuttle 7: 8-bit Vector Compute in SRAM Multiplier

This project is a vector multiplier with stationary weights, implemented on 4 tiles in TinyTapeout. The design, tests, and documentation took around 10 hours. To my surprise, this project was much easier to complete, perhaps because the design had fewer connections to the outside world for its ISA, or perhaps because I was simply getting better. It includes 8 multiply-accumulate (MAC) units, each with two registers, and an adder tree that sums the eight products of an 8-element vector without precision loss. More details here in this repo.
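A quick bit-width check shows what summing "without precision loss" requires. This derivation assumes unsigned 8-bit weights $w_i$ and activations $a_i$; the signedness of the real design is not stated here, and signed operands would need slightly different bounds.

```latex
\begin{aligned}
w_i a_i &\le (2^8 - 1)^2 = 65025 < 2^{16} = 65536
  &&\text{each product fits in 16 bits} \\
\sum_{i=0}^{7} w_i a_i &\le 8 \cdot 65025 = 520200 < 2^{19} = 524288
  &&\text{the full sum fits in 19 bits} \\
16 &\rightarrow 17 \rightarrow 18 \rightarrow 19
  &&\text{bits after each of the 3 adder-tree levels}
\end{aligned}
```

Each pairwise addition can grow the result by at most one bit, so three tree levels turn 16-bit products into a 19-bit sum.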

This compute paradigm is loosely termed processing in/near memory (PIM/PNM) or Compute in Memory (CIM). It’s not a new concept, and I had a few papers on it in 2017, which was the third wave of interest. However, I believe this paradigm is here to stay, thanks to the memory-bound computations of new workloads such as large language models (LLMs).

Components and Operation

- MAC Units: Each unit multiplies a weight by an activation, each held in its own register.

- Adder Tree: Pairwise additions in three levels (8 → 4 → 2 → 1) sum the eight products.

- Readout Mechanism: Because the output interface is only 8 bits wide, the final sum is read out over multiple clock cycles.
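The MAC array and adder tree described above can be sketched as a small Python behavioral model. This is an illustration under stated assumptions (unsigned 8-bit operands, names such as `MAC` and `adder_tree` are hypothetical), not the design’s actual RTL.

```python
# Hypothetical behavioral model of the MAC array and adder tree.
# Assumptions: unsigned 8-bit weights and activations, 8 MAC units, and a
# pairwise adder tree; the real RTL's widths and signedness may differ.
N_MACS = 8


class MAC:
    """One MAC unit: a stationary weight register and an activation register."""

    def __init__(self):
        self.weight = 0
        self.activation = 0

    def product(self) -> int:
        return self.weight * self.activation   # at most 16 bits


def adder_tree(values):
    """Sum pairwise, level by level: 8 -> 4 -> 2 -> 1 (three levels)."""
    level, depth = list(values), 0
    while len(level) > 1:
        level = [level[i] + level[i + 1] for i in range(0, len(level), 2)]
        depth += 1
    return level[0], depth


macs = [MAC() for _ in range(N_MACS)]
for i, mac in enumerate(macs):
    mac.weight = i + 1          # weights are loaded once and stay put
for mac in macs:
    mac.activation = 255        # a new activation vector is loaded per use

total, levels = adder_tree(m.product() for m in macs)
print(total, levels)            # 9180 3
```

Keeping the weights in place while only the activations change is what "stationary weights" means here: reusing the same weights across many activation vectors avoids reloading them.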

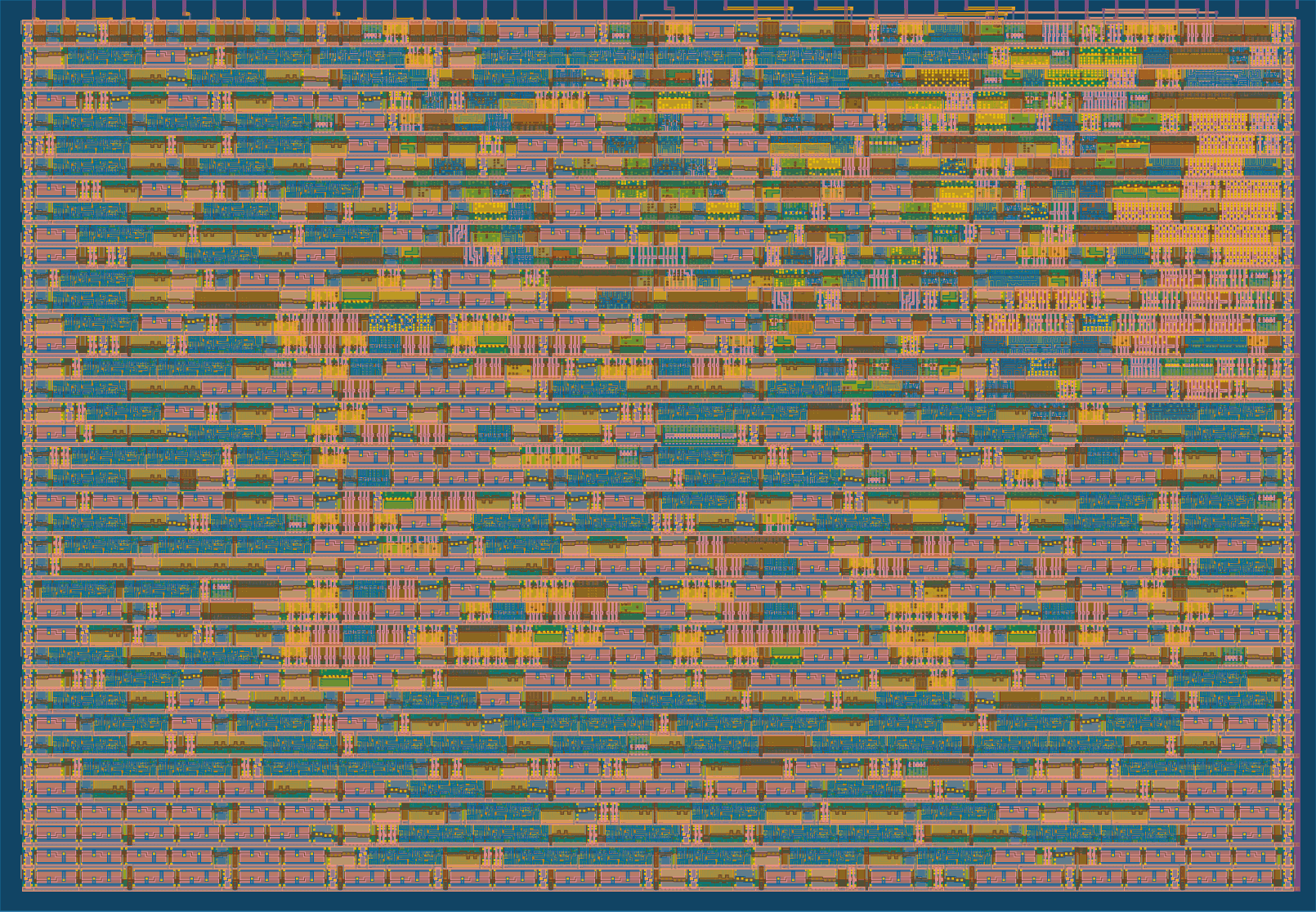

Layout and Statistics

| Utilization (%) | Wire length (µm) |
|---|---|
| 54.15 | 91602 |

| Category | Cells | Count |
|---|---|---|
| Fill | decap fill | 5412 |
| Combo Logic | o21ai a22oi o2bb2a and2b o21bai a21o a22o a32o o21ba o21a or3b or2b a21bo a31o a21oi a31oi o211a a211oi o211ai a211o nand3b o22ai a311o nand4b or4bb o31a o32a and4b o22a o311a or4b a41o o31ai and4bb a2bb2oi a2111o and3b nor3b a2bb2o o2bb2ai o2111a a221oi nor4b a2111oi nor2b a221o | 1291 |
| Tap | tapvpwrvgnd | 1037 |
| NOR | xnor2 nor2 nor3 nor4 | 629 |
| NAND | nand2b nand2 nand3 nand4 | 510 |
| AND | and4 and3 and2 a21boi | 442 |
| Buffer | buf clkbuf | 353 |
| OR | or2 or4 xor2 or3 | 331 |
| Flip Flops | dfrtp | 158 |
| Multiplexer | mux2 | 145 |
| Inverter | inv | 45 |
| Misc | dlymetal6s2s dlygate4sd3 conb | 43 |
| Diode | diode | 21 |

More details are available in this GitHub Actions run.

Testing

Writing Verilog is not sequential coding; you must keep the hardware in view. Unlike typical code, where operations execute one after another, Verilog describes hardware that operates inherently in parallel. Most of my time went into debugging my Verilog code, and cocotb made much of this easier: it lets you write testbenches in Python that drive and check your design in a simulator.
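The "parallel, not sequential" point can be illustrated with a small Python analogy (this is not a Verilog simulator, and the function names are hypothetical). On a clock edge, every register samples its input from the old state, which is what Verilog nonblocking (`<=`) assignments model; software-style statements instead see each other’s updates immediately.

```python
# Python analogy for clocked hardware vs. sequential software.
# clock_edge_nonblocking models Verilog `a <= b; b <= a;` inside an
# always @(posedge clk) block; sequential_assignments models ordinary code.

def clock_edge_nonblocking(state):
    """All right-hand sides read the old state; registers update together."""
    nxt = dict(state)            # next-state buffer
    nxt["a"] = state["b"]
    nxt["b"] = state["a"]
    return nxt


def sequential_assignments(state):
    """Each statement sees the result of the previous one."""
    s = dict(state)
    s["a"] = s["b"]
    s["b"] = s["a"]              # reads the value just written
    return s


start = {"a": 1, "b": 2}
print(clock_edge_nonblocking(start))   # {'a': 2, 'b': 1}: registers swap
print(sequential_assignments(start))   # {'a': 2, 'b': 2}: no swap
```

Two flip-flops wired to each other swap values on every clock edge, whereas the same two lines executed in order do not.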

Testing the 8-bit Single-Cycle Microprocessor

The processor has been tested with a suite of 12 testbenches, each designed to validate a specific function or operation. These testbenches cover basic ALU operations, data movement between registers, and the load/store functionality. Although the basic operational tests pass, timing interactions between consecutive instructions have not been exhaustively verified. The expectation is that a sophisticated compiler would handle these timing considerations, much as some historical systems did (early MIPS processors, for example, relied on the compiler rather than hardware interlocks to schedule around load delays). You can view the tests here. Many tests are commented out because they access internal signals, which does not work against the synthesized (gate-level) design that is tested on GitHub.
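A common way to structure such testbenches is to compare the design against a simple golden reference over edge cases plus seeded random vectors. The sketch below applies that pattern to a hypothetical bit-level model of an 8-bit ripple-carry adder; in a real cocotb testbench, the simulated Verilog would take the place of `ripple_carry_add`.

```python
# Self-checking test pattern: a gate-style model of an 8-bit ripple-carry
# adder is compared against a simple "golden" reference over edge cases
# plus seeded random vectors. All names here are hypothetical.
import random


def ripple_carry_add(a: int, b: int, cin: int = 0):
    """Add two 8-bit values one bit at a time, like chained full adders."""
    total, carry = 0, cin
    for i in range(8):
        x, y = (a >> i) & 1, (b >> i) & 1
        s = x ^ y ^ carry                       # full-adder sum bit
        carry = (x & y) | (carry & (x ^ y))     # full-adder carry-out
        total |= s << i
    return total, carry


def reference_add(a: int, b: int, cin: int = 0):
    """Golden model: plain integer addition split into (sum, carry)."""
    raw = a + b + cin
    return raw & 0xFF, raw >> 8


def run_vectors(n_random: int = 1000, seed: int = 0) -> int:
    rng = random.Random(seed)                   # seeded, so failures reproduce
    edges = [(0, 0), (255, 1), (255, 255), (128, 128), (0x55, 0xAA)]
    vectors = edges + [(rng.randrange(256), rng.randrange(256))
                       for _ in range(n_random)]
    for a, b in vectors:
        for cin in (0, 1):
            got = ripple_carry_add(a, b, cin)
            exp = reference_add(a, b, cin)
            assert got == exp, f"{a}+{b}+{cin}: got {got}, expected {exp}"
    return len(vectors)


print(run_vectors())
```

Edge cases such as `255 + 1` exercise the full carry chain, which random vectors hit only rarely.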

Testing the Compute in SRAM Multiplier

The tests for the Compute in SRAM design are more elaborate. Several tests under test/test.py help anyone understand how the design works. Because they rely on access to internal signals, most of them are commented out. Each test also contains its own commented-out Verilog code, since some tests were initially developed to test and verify individual units such as the MACs and adders. There are four categories of tests:

- Single MAC Operation Test: This test verifies the basic functionality of a single MAC unit, ensuring that it correctly performs multiplication and stores the result.

- Multiple MAC Units Loading Weights and Activations: This test checks that multiple MAC units can load weights and activations correctly and simultaneously. It ensures that each MAC unit receives and processes its assigned data independently of the others.

- Adder Tree Tests: These tests validate the correctness of the adder tree at all levels. They ensure that the outputs from the MAC units are correctly summed through the hierarchical adder tree structure, producing accurate intermediate and final sums.

- Read Result Tests: These tests focus on the readout circuit, verifying that the s_adder_tree result can be correctly read out in multiple 8-bit chunks and that the full result is accurately reconstructed over several clock cycles. They also use only external signals, which is why they are the only tests not commented out in the final version.

The last three test categories run over several test vectors to ensure correct operation with various numbers. These vectors cover a wide range of values and scenarios to exercise the design thoroughly and confirm its correctness under different conditions.
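A minimal sketch of how such test vectors and the chunked readout check might be generated is shown below. It assumes unsigned 8-bit operands, a 19-bit result, and readout of the least significant byte first; the helper names (`make_vectors`, `to_chunks`, `from_chunks`) are hypothetical and not taken from test/test.py.

```python
# Test-vector generation for the dot-product readout path.
# Assumptions (hypothetical): unsigned 8-bit operands, a 19-bit result,
# and readout in three 8-bit chunks, least significant byte first.
import random


def dot(weights, activations):
    """Reference result: plain dot product of two 8-element vectors."""
    return sum(w * a for w, a in zip(weights, activations))


def to_chunks(value, n_chunks=3):
    """What the readout would present, one byte per clock cycle."""
    return [(value >> (8 * i)) & 0xFF for i in range(n_chunks)]


def from_chunks(chunks):
    """Host-side reconstruction of the full-width result."""
    return sum(c << (8 * i) for i, c in enumerate(chunks))


def make_vectors(n_random=200, seed=1):
    rng = random.Random(seed)
    vecs = [
        ([0] * 8, [0] * 8),                    # all zeros
        ([255] * 8, [255] * 8),                # largest possible sum
        ([1] * 8, [255] * 8),                  # small weights, max activations
        ([255] + [0] * 7, [255] + [0] * 7),    # a single active lane
    ]
    for _ in range(n_random):
        vecs.append(([rng.randrange(256) for _ in range(8)],
                     [rng.randrange(256) for _ in range(8)]))
    return vecs


for w, a in make_vectors():
    expected = dot(w, a)
    assert expected < 2 ** 19                  # fits the assumed result width
    assert from_chunks(to_chunks(expected)) == expected
```

Mixing hand-picked extremes with seeded random vectors covers both the corner cases (overflow into the top chunk) and the typical ones, while keeping failures reproducible.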